Introducing Tay, Princeton's new AI assistant for students

Note: I know there used to be a Microsoft chatbot called Tay that went rogue. That’s not gonna happen here.

Last week, I launched a chatbot to help Princeton students with any questions they have about campus. Since then, we’ve handled over 1,300 conversations across topics like academics, social life, and events.

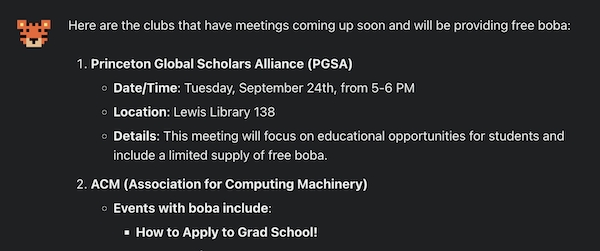

Tay taps directly into the informal knowledge that students have about campus. It reads listserv emails so it can tell you exactly what events are happening, what clubs are meeting, and whether there is free boba being offered somewhere that day (a very important use case).

It also has dedicated tooling for eating clubs, the Princeton equivalent of frats, which most students are obsessed with joining. We have specific information about the vibe of each club, the questions you might get asked, and what the joining process and deliberations generally look like.

Of course, we didn’t leave out the official information either. Tay can reference any webpage on the Princeton domain and integrates with Princeton Courses to pull class data and student reviews.

Technical design

The chatbot generally runs on a retrieval-augmented generation (RAG) pipeline built on top of OpenAI and MongoDB Atlas Search. The database holds roughly 150K embedded document chunks. Some key components of the pipeline include:

- Query Enhancer - Before retrieval, an LLM call rewrites the query to include relevant context from the ongoing conversation. The prompt needed several specific instructions to maximize performance in the college student domain. For instance, we specify how the LLM should handle acronyms and other shorthand that is common among college students. In many cases, we instruct the model not to expand unknown acronyms, because doing so carries a high risk of hallucination.

- Hybrid Search - We use reciprocal rank fusion (RRF) to combine results from full-text search and vector search, both of which MongoDB supports directly. Hybrid search plays a key role for queries containing terms the LLM doesn’t natively know (e.g. acronyms, slang, and department codes), since full-text search can match those tokens literally even when their embeddings carry little meaning. It is necessary for good performance.

- Tool Use - As of this writing, Tay has 8 tools (plus the option to not use a tool). Some tools call external APIs, such as the Princeton Courses website or dining hall menus. Others assist retrieval through prefiltering. For example, when a user asks about upcoming club events, searching the full set of documents often fails to surface the right results. Instead, we created a tool specifically for accessing email data and instruct the model to invoke it for upcoming club events. Internally, the tool prefilters to email documents only before running hybrid search. This pattern has proven effective for all sorts of distinctions the LLM understands poorly (e.g. regular club vs. eating club). A catch-all tool that searches all documents is also useful for queries with unknown terms and no context (e.g. “What is PUCP?”). In some edge cases, however, response quality is limited by how the tools are delineated.
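The post doesn’t publish the Query Enhancer prompt, so the following is only a minimal sketch, assuming the OpenAI chat message format, of where the conversation history and the acronym rule might go. The instruction text, `build_rewrite_messages`, and `max_turns` are hypothetical.

```python
from typing import Dict, List

# Hypothetical instructions; Tay's actual rewrite prompt is not published.
REWRITE_INSTRUCTIONS = (
    "Rewrite the student's latest message as a standalone search query. "
    "Resolve pronouns and follow-up references using the conversation so far. "
    "Keep acronyms, slang, and department codes exactly as written, and "
    "do not expand an acronym unless the conversation defines it."
)


def build_rewrite_messages(
    history: List[Dict[str, str]], latest: str, max_turns: int = 6
) -> List[Dict[str, str]]:
    """Build chat messages asking an LLM to turn `latest` into a standalone query.

    `history` holds {"role": ..., "content": ...} turns, oldest first. Only the
    most recent `max_turns` turns are included to keep the prompt short.
    """
    recent = history[-max_turns:] if max_turns > 0 else []
    transcript = "\n".join(f"{turn['role']}: {turn['content']}" for turn in recent)
    user_prompt = (
        f"Conversation so far:\n{transcript or '(none)'}\n\n"
        f"Latest message: {latest}\n\n"
        "Standalone query:"
    )
    return [
        {"role": "system", "content": REWRITE_INSTRUCTIONS},
        {"role": "user", "content": user_prompt},
    ]
```

The returned list could be passed as `messages` to `client.chat.completions.create` in the OpenAI Python SDK, with the model’s reply used as the retrieval query in place of the raw message.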
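The post doesn’t say how Tay weights its two result lists, so here is a minimal sketch of standard reciprocal rank fusion, assuming each search returns a ranked list of chunk IDs. Every chunk scores 1/(k + rank) in each list where it appears, and those scores are summed; k = 60 is a commonly used default, not a value taken from Tay. `reciprocal_rank_fusion` and the example IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(
    ranked_lists: Sequence[Sequence[str]], k: int = 60
) -> List[str]:
    """Fuse several ranked lists of document IDs with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Ties are broken by order of first appearance.
    """
    scores: Dict[str, float] = defaultdict(float)
    first_seen: Dict[str, int] = {}
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
            first_seen.setdefault(doc_id, len(first_seen))
    # Highest fused score first; earlier-seen documents win ties.
    return sorted(scores, key=lambda d: (-scores[d], first_seen[d]))


# Hypothetical results from full-text search and vector search.
full_text_ids = ["pucp_page", "email_42", "dept_codes"]
vector_ids = ["email_42", "club_faq", "pucp_page"]
print(reciprocal_rank_fusion([full_text_ids, vector_ids]))
# ['email_42', 'pucp_page', 'club_faq', 'dept_codes']
```

Because RRF only looks at ranks, it never has to reconcile full-text relevance scores with cosine similarities. A chunk ranked well by both searches (`email_42`) beats one ranked first by only one of them.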
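The prefiltering pattern from Tool Use can be sketched as follows, assuming each chunk stores a `source` field (for example `"email"`) and that the model chooses tools through OpenAI function calling. Tool names, index names, and field names are all hypothetical. The selected tool determines a filter applied inside both the Atlas `$vectorSearch` and `$search` stages, so hybrid search only ranks email chunks when the email tool is chosen, and the catch-all tool applies no filter.

```python
from typing import Any, Dict, List, Optional


def _query_tool(name: str, description: str) -> Dict[str, Any]:
    """Build a tool schema in the OpenAI function-calling format with one `query` argument."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        },
    }


# Hypothetical tools; Tay's real tool names and descriptions are not published.
TOOLS = [
    _query_tool("search_emails",
                "Search listserv emails. Use for upcoming events, club meetings, and free food."),
    _query_tool("search_all",
                "Search every document. Use for unfamiliar terms with no other context."),
]

# Value of the (assumed) `source` field each tool restricts to; None means no prefilter.
PREFILTERS: Dict[str, Optional[str]] = {
    "search_emails": "email",
    "search_all": None,
}


def vector_search_stage(tool_name: str, query_vector: List[float], limit: int = 10) -> Dict[str, Any]:
    """Build an Atlas $vectorSearch stage, prefiltered by the tool's source type."""
    stage: Dict[str, Any] = {
        "index": "chunks_vector_index",  # hypothetical index name
        "path": "embedding",  # hypothetical embedding field
        "queryVector": query_vector,
        "numCandidates": limit * 10,
        "limit": limit,
    }
    source = PREFILTERS[tool_name]
    if source is not None:
        # `source` must be indexed as a filter field in the vector index.
        stage["filter"] = {"source": {"$eq": source}}
    return {"$vectorSearch": stage}


def text_search_stage(tool_name: str, query: str) -> Dict[str, Any]:
    """Build an Atlas Search $search stage with the same prefilter."""
    compound: Dict[str, Any] = {"must": [{"text": {"query": query, "path": "text"}}]}
    source = PREFILTERS[tool_name]
    if source is not None:
        # `equals` on a string assumes `source` is indexed with the token type.
        compound["filter"] = [{"equals": {"path": "source", "value": source}}]
    return {"$search": {"index": "chunks_text_index", "compound": compound}}
```

Passing `tools=TOOLS` with `tool_choice="auto"` to `client.chat.completions.create` lets the model either call one of these tools or answer without one. Each stage has to be the first stage of its own `aggregate` pipeline, and the two result lists can then be merged with RRF.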

You can ask for events with free food and boba in case you're hungry.

Safety considerations

The chatbot generally relies on information that is published by official sources. We’ve made sure not to include information that is confidential or should not be spread because of campus organization policy (even if it can be reasonably found with a Google search).

One area where we expected issues was eating clubs. Most online content about eating clubs portrays them in a negative light, which isn’t an accurate picture of them. However, many of the same sources contain valuable informal knowledge that should be available to students, so we edited these particular sources to remove the biased and inappropriate sections.

Another pattern we noticed was how the uneven amount of information available about each club’s practices influenced the chatbot’s responses. When asked general questions about eating clubs, the chatbot leaned toward referencing the clubs with the most presence in the dataset or those that sent the most emails. We decided to keep this behavior, since it was the eating clubs’ own decision to withhold information rather than a flaw in our approach.

During initial testing with a small number of users, we found that the chatbot gave reasonable answers to questions on controversial or opinion-based topics. The responses reflected broad consensus and factual information, enabling students to make their own judgements.